Towards Safer Navigation - Reward Shaping with Prior Topographic Knowledge

Enhancing navigation safety in deep reinforcement learning agents through reward shaping with prior map information

Motivation

Modular navigation is reliable yet rigid; end-to-end RL is flexible but often unsafe near obstacles. We aim to train agents that not only reach goals but also maintain human-like safety margins during navigation.

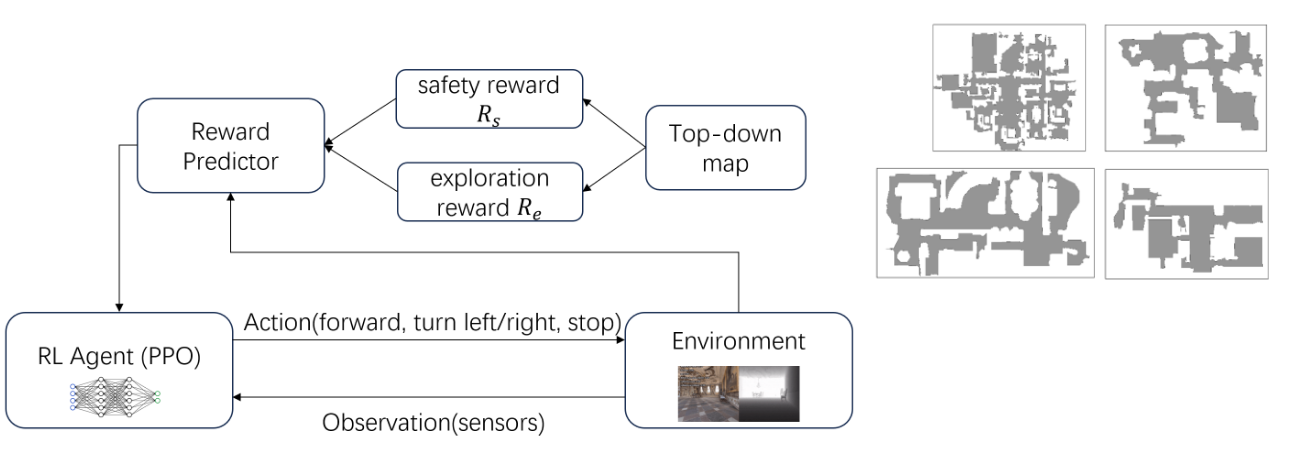

Method Overview

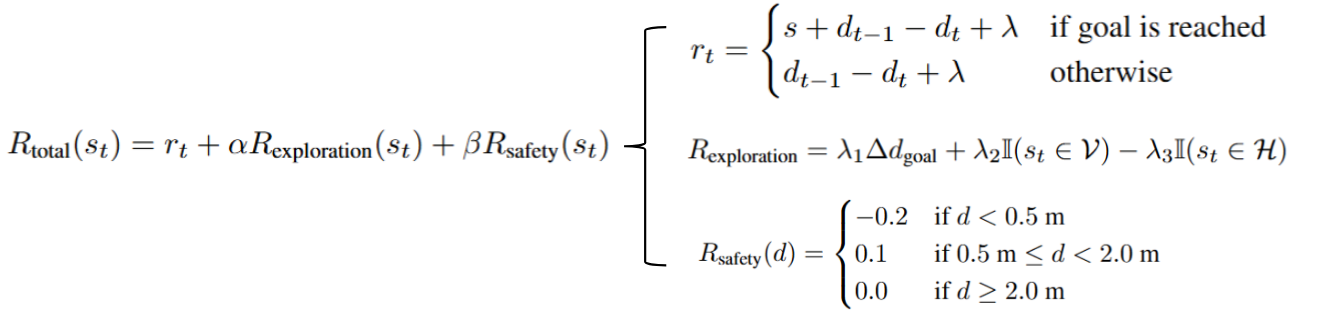

We introduce safety-aware reward shaping using prior topographic knowledge to form a continuous “safety gradient” rather than a binary collision penalty. The agent is penalized in a near-obstacle “Penalty Zone” and rewarded in a “Safety Zone” that preserves a sensible buffer, balancing progress and safety. Training uses PPO with multi-modal inputs (RGB, depth, GPS/compass) for robust perception and control.
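As a rough illustration of the "safety gradient" idea, the sketch below maps the agent's distance to the nearest obstacle (assumed to be looked up from the prior map) to a continuous reward term. The function name, the piecewise-linear shape, the zone radii, and the reward magnitudes are all hypothetical choices made for this example; they are not the values or the exact formulation used in the project.

```python
def safety_shaping_reward(
    dist_to_obstacle: float,
    penalty_radius: float = 0.2,   # assumed outer edge of the Penalty Zone (m)
    safety_radius: float = 0.5,    # assumed outer edge of the Safety Zone (m)
    max_penalty: float = -1.0,     # assumed reward at contact with an obstacle
    max_bonus: float = 0.1,        # assumed reward once the full buffer is kept
) -> float:
    """Hypothetical piecewise-linear safety term (illustrative only).

    The term is continuous in distance, so the agent sees a gradient
    instead of a single binary collision penalty.
    """
    if dist_to_obstacle < 0:
        raise ValueError("distance must be non-negative")
    if not 0 < penalty_radius < safety_radius:
        raise ValueError("expected 0 < penalty_radius < safety_radius")

    if dist_to_obstacle <= penalty_radius:
        # Penalty Zone: ramps from max_penalty at contact up to 0 at the zone edge.
        return max_penalty * (1.0 - dist_to_obstacle / penalty_radius)
    if dist_to_obstacle <= safety_radius:
        # Safety Zone: ramps from 0 up to max_bonus as the buffer grows.
        frac = (dist_to_obstacle - penalty_radius) / (safety_radius - penalty_radius)
        return max_bonus * frac
    # Assumption: the bonus saturates, so moving even farther away earns nothing extra.
    return max_bonus


def shaped_step_reward(progress_reward: float, dist_to_obstacle: float) -> float:
    """Adds the safety term to an existing task reward (e.g. progress to goal)."""
    return progress_reward + safety_shaping_reward(dist_to_obstacle)
```

Capping the bonus beyond the Safety Zone is one way to keep the agent from trading goal progress for ever-larger clearances; other shapes (for example, an exponential decay) would fit the same description.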

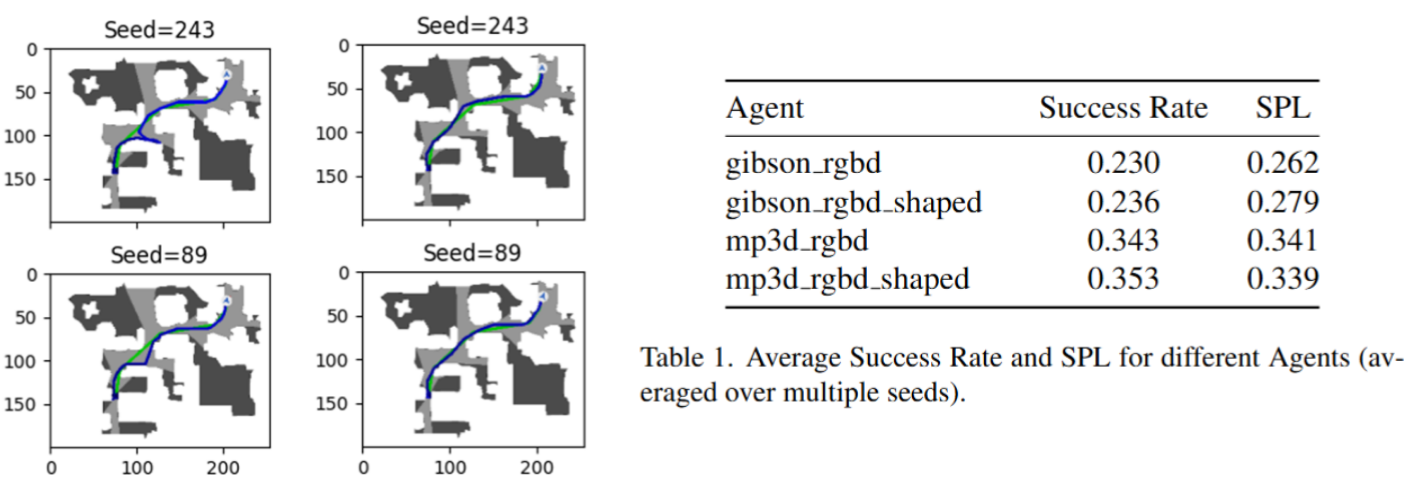

Results

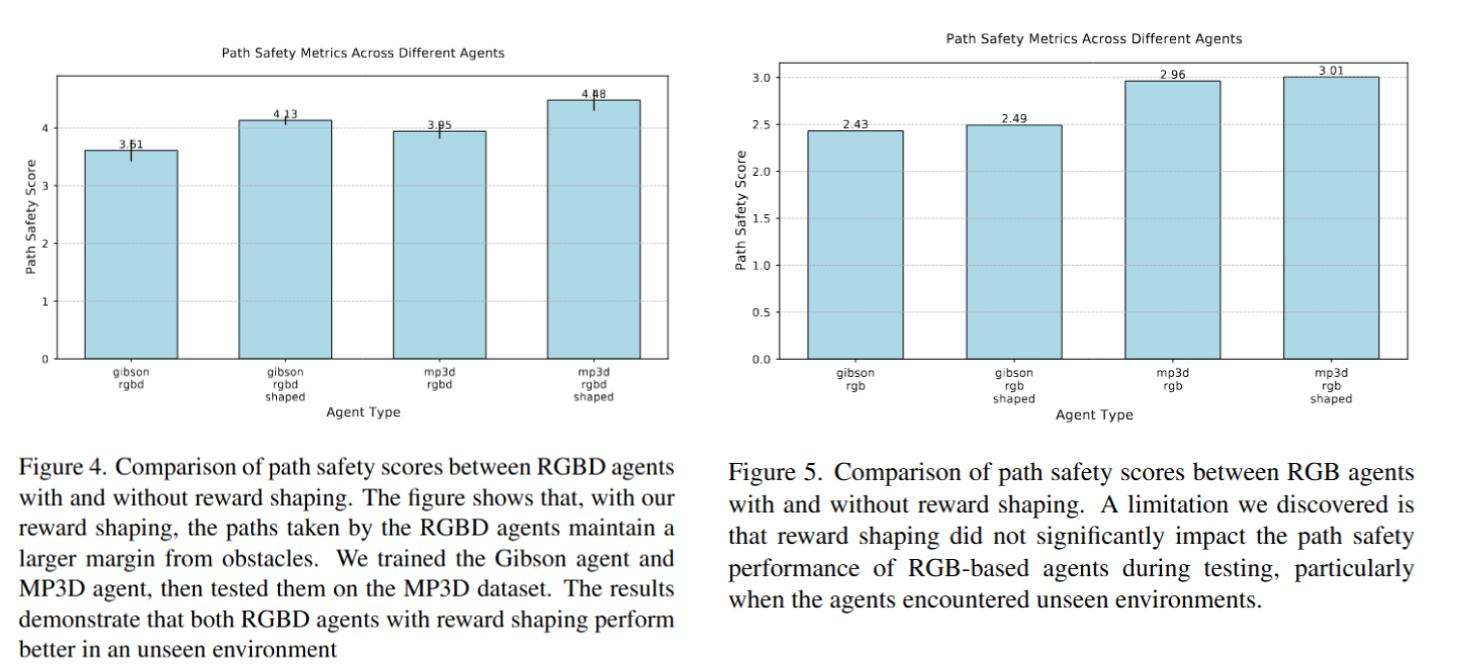

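We train and evaluate in the Habitat simulator on the Matterport3D (MP3D) and Gibson datasets. Beyond Success and SPL (Success weighted by Path Length), we measure Path Safety, the average distance to the nearest obstacle along the agent's trajectory: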

- Path Safety: +6 cm over baseline

- Success / SPL: Competitive with baseline while improving safety

- Training: Converges in ~5M steps (≈10 hours); stable across runs; improved sample efficiency
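The metrics above can be sketched in a few lines. Path Safety follows the definition given here (mean distance to the nearest obstacle over the trajectory), and SPL follows its standard definition, the per-episode average of S · l / max(p, l), where S is the success flag, l the shortest-path length, and p the length of the path the agent actually took. The function names and input layout are hypothetical, and how the per-step obstacle distances are obtained is left as an assumption.

```python
import math
from typing import Sequence, Tuple


def path_safety(obstacle_distances: Sequence[float]) -> float:
    """Mean distance to the nearest obstacle over the steps of one trajectory."""
    if not obstacle_distances:
        raise ValueError("trajectory must contain at least one step")
    return sum(obstacle_distances) / len(obstacle_distances)


def path_length(positions: Sequence[Tuple[float, ...]]) -> float:
    """Total Euclidean length of a piecewise-linear path."""
    return sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))


def spl(
    successes: Sequence[int],
    shortest_lengths: Sequence[float],
    agent_lengths: Sequence[float],
) -> float:
    """Success weighted by Path Length, averaged over episodes.

    Assumes every episode has a positive shortest-path length.
    """
    if not successes:
        raise ValueError("need at least one episode")
    total = 0.0
    for s, l, p in zip(successes, shortest_lengths, agent_lengths):
        total += s * l / max(p, l)
    return total / len(successes)
```

For example, an agent that succeeds but takes a path twice as long as the shortest one contributes 0.5 to SPL for that episode, while a failed episode contributes 0 regardless of path length.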

Tech Stack

- Deep Learning Framework: PyTorch 1.9+

- RL Algorithm: Proximal Policy Optimization (PPO)

- Simulation: Habitat-Sim, Habitat-Lab

- Computer Vision: OpenCV, PIL

- Neural Networks: ResNet18, LSTM

- Environment: Python 3.8+, CUDA 11.2

- Visualization: Matplotlib, TensorBoard

Next Steps

Scale up training, refine reward shaping, and transfer to real robots. Extend to dynamic environments with moving obstacles and explore multi-agent coordination for safe, efficient navigation.

Project Impact

This research contributes to the field of safe autonomous navigation by:

- Demonstrating effective integration of prior knowledge in RL

- Providing a novel safety-aware reward shaping approach

- Introducing Path Safety (average distance to the nearest obstacle) as an evaluation metric for navigation safety

- Working towards bridging the gap between simulation and real-world deployment

Project Team

- Lead Researcher: Jiajie Zhang (zhangjj2023@shanghaitech.edu.cn)

- Advisor: Professor Sören Schwertfeger

- Institution: MARS Lab, ShanghaiTech University

Related Resources

- Project Slides: Deep Learning Project Defense

- Technical Report: Detailed Project Report

- Code Repository: GitHub Repository

This project is a step towards safer, more reliable autonomous agents: by shaping the reward with prior map knowledge, it trains agents to weigh safe clearance alongside task completion, which brings learned navigation closer to real-world practicality.