Publications

Intellectual fearlessness, follow curiosity.

2025

- From Observation to Action: Latent Action-based Primitive Segmentation for VLA Pre-training in Industrial Settings. Jiajie Zhang+, Sören Schwertfeger, and Alexander Kleiner. arXiv preprint arXiv:2511.21428, 2025.

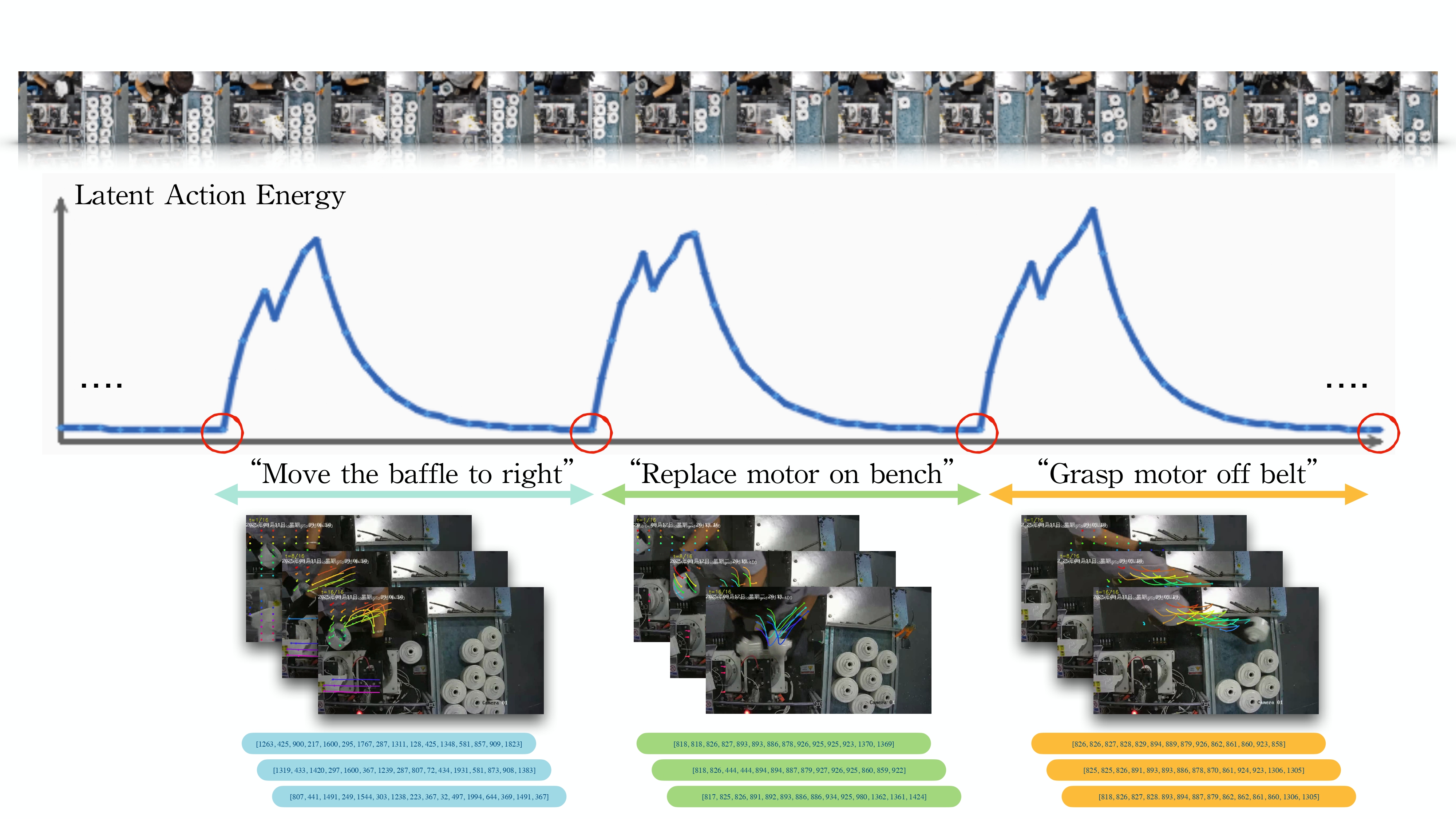

We present a novel unsupervised framework to unlock vast unlabeled human demonstration data from continuous industrial video streams for Vision-Language-Action (VLA) model pre-training. Our method first trains a lightweight motion tokenizer to encode motion dynamics, then employs an unsupervised action segmenter leveraging a novel "Latent Action Energy" metric to discover and segment semantically coherent action primitives. The pipeline outputs both segmented video clips and their corresponding latent action sequences, providing structured data directly suitable for VLA pre-training. Evaluations on public benchmarks and a proprietary electric motor assembly dataset demonstrate effective segmentation of key tasks performed by humans at workstations. Further clustering and quantitative assessment via a Vision-Language Model confirm the semantic coherence of the discovered action primitives. To our knowledge, this is the first fully automated end-to-end system for extracting and organizing VLA pre-training data from unstructured industrial videos, offering a scalable solution for embodied AI integration in manufacturing.
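To give a rough feel for energy-based segmentation, here is a minimal sketch. The abstract does not define the "Latent Action Energy" metric, so this sketch assumes a stand-in: the L2 norm of each frame's latent action vector, thresholded to find active spans. The function name `segment_by_energy` and the parameters `threshold` and `min_len` are hypothetical and are not taken from the paper.

```python
import math

def segment_by_energy(latents, threshold, min_len=1):
    """Split a sequence of latent action vectors into active segments.

    ASSUMPTION: 'energy' is the per-frame L2 norm of the latent vector.
    This is an illustrative stand-in, not the paper's metric.
    Returns a list of (start, end) frame indices, end exclusive.
    """
    # Per-frame energy under the stated assumption.
    energy = [math.sqrt(sum(x * x for x in vec)) for vec in latents]

    segments = []
    start = None
    for i, e in enumerate(energy):
        if e > threshold and start is None:
            start = i                      # a segment opens
        elif e <= threshold and start is not None:
            if i - start >= min_len:       # keep only long-enough segments
                segments.append((start, i))
            start = None                   # the segment closes
    # Close a segment that runs to the end of the sequence.
    if start is not None and len(energy) - start >= min_len:
        segments.append((start, len(energy)))
    return segments
```

Called on a toy sequence such as `[[0, 0], [1, 1], [2, 0], [0, 0], [0, 3]]` with `threshold=0.5`, the function returns the index ranges of the frames whose energy exceeds the threshold. A real pipeline would likely smooth the energy signal and choose the threshold from data.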

- Generation of Indoor Open Street Maps for Robot Navigation from CAD Files. Jiajie Zhang+, Shenrui Wu, Xu Ma, and 1 more author. arXiv preprint arXiv:2507.00552, 2025.

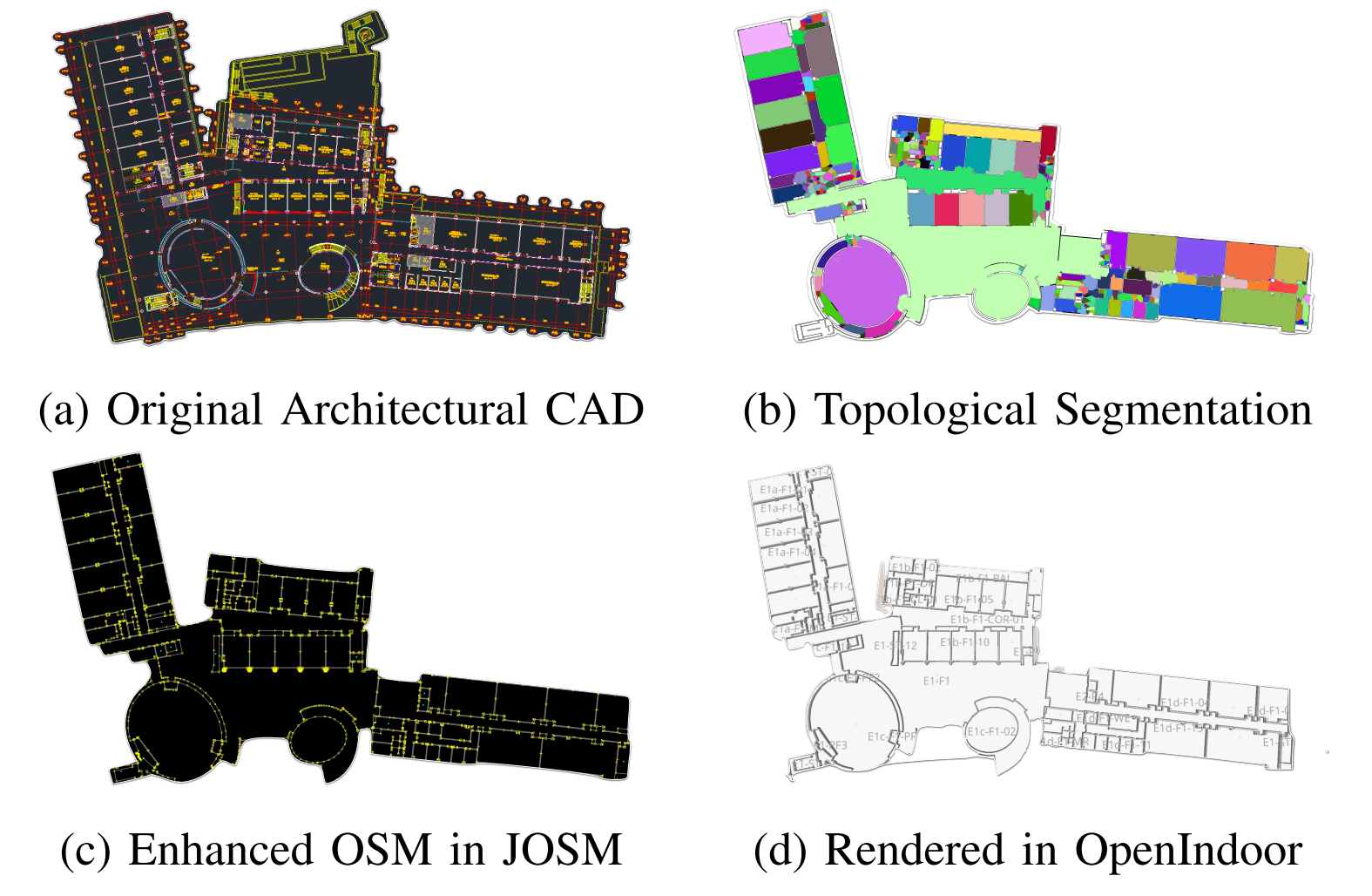

The deployment of autonomous mobile robots is predicated on the availability of environmental maps, yet conventional generation via SLAM (Simultaneous Localization and Mapping) suffers from significant limitations in time, labor, and robustness, particularly in dynamic, large-scale indoor environments where map obsolescence can lead to critical localization failures. To address these challenges, this paper presents a complete and automated system for converting architectural Computer-Aided Design (CAD) files into a hierarchical topometric OpenStreetMap (OSM) representation, tailored for robust lifelong robot navigation. Our core methodology involves a multi-stage pipeline that first isolates key structural layers from the raw CAD data and then employs an AreaGraph-based topological segmentation to partition the building layout into a hierarchical graph of navigable spaces. This process yields a comprehensive and semantically rich map, further enhanced by automatically associating textual labels from the CAD source and cohesively merging multiple building floors into a unified, topologically correct model. By leveraging the permanent structural information inherent in CAD files, our system circumvents the inefficiencies and fragility of SLAM, offering a practical and scalable solution for deploying robots in complex indoor spaces. The software is encapsulated within an intuitive Graphical User Interface (GUI) to facilitate practical use.

- Intelligent LiDAR navigation: Leveraging external information and semantic maps with LLM as copilot. Fujing Xie+, Jiajie Zhang, and Sören Schwertfeger. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2025.

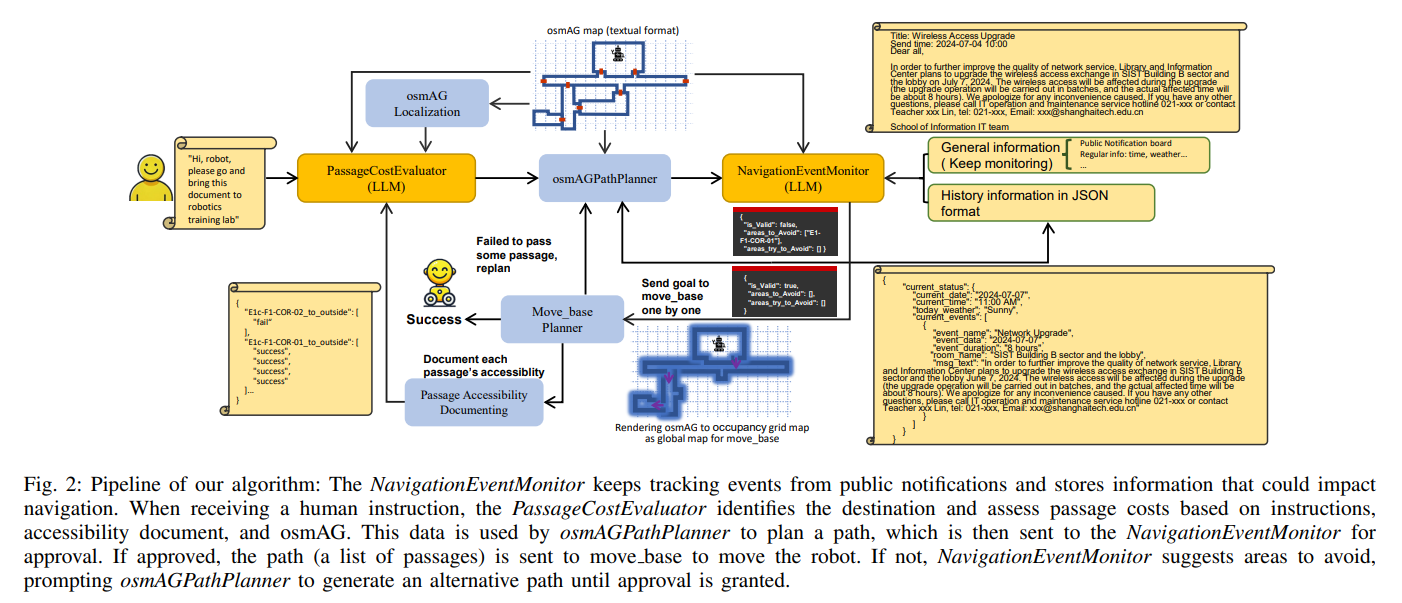

Traditional robot navigation systems primarily utilize occupancy grid maps and laser-based sensing technologies, as demonstrated by the popular move_base package in ROS. Unlike robots, humans navigate not only through spatial awareness and physical distances but also by integrating external information, such as elevator maintenance updates from public notification boards, and experiential knowledge, like the need for special access through certain doors. With the development of Large Language Models (LLMs), which possess text understanding and intelligence close to human performance, there is now an opportunity to infuse robot navigation systems with a level of understanding akin to human cognition. In this study, we propose using osmAG (Area Graph in OpenStreetMap textual format), an innovative semantic topometric hierarchical map representation, to bridge the gap between the capabilities of ROS move_base and the contextual understanding offered by LLMs. Our methodology employs LLMs as an actual copilot in robot navigation, enabling the integration of a broader range of informational inputs while maintaining the robustness of traditional robotic navigation systems.

- WiFi-based Global Localization in Large-Scale Environments Leveraging Structural Priors from osmAG. Xu Ma+, Jiajie Zhang, Fujing Xie, and 1 more author. arXiv preprint arXiv:2508.10144, 2025.

Global localization is essential for autonomous robotics, especially in indoor environments where GPS signals are unavailable. We propose a novel WiFi-based localization framework that leverages ubiquitous wireless infrastructure and the OpenStreetMap Area Graph (osmAG) for large-scale indoor environments. Our approach integrates signal propagation modeling with osmAG’s geometric and topological priors. In the offline phase, an iterative optimization algorithm localizes WiFi Access Points (APs) by modeling wall attenuation, achieving a mean localization error of 3.79 m (35.3% improvement over trilateration). In the online phase, real-time robot localization uses the augmented osmAG map, yielding a mean error of 3.12 m in fingerprinted areas (8.77% improvement over KNN fingerprinting) and 3.83 m in non-fingerprinted areas (81.05% improvement). Comparison with a fingerprint-based method shows that our approach is much more space efficient and achieves superior localization accuracy, especially for positions where no fingerprint data are available. Validated across a complex 11,025 m² multi-floor environment, this framework offers a scalable, cost-effective solution for indoor robotic localization, solving the kidnapped robot problem.
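As an illustration of what "modeling wall attenuation" can look like, the sketch below uses the textbook log-distance path-loss model with a fixed loss per wall. The abstract does not state which propagation model the paper uses, so the model form, the function name `predicted_rssi`, and all default values are assumptions chosen for illustration only.

```python
import math

def predicted_rssi(distance_m, walls_crossed,
                   ref_power_dbm=-40.0,     # ASSUMED RSSI at the reference distance
                   path_loss_exponent=2.0,  # ASSUMED free-space-like exponent
                   wall_loss_db=5.0,        # ASSUMED attenuation per wall
                   ref_distance_m=1.0):
    """Predict received signal strength (dBm) from an access point.

    Log-distance path loss plus a per-wall penalty:
        RSSI = P0 - 10 * n * log10(d / d0) - L_wall * k
    This is a generic model, not necessarily the one used in the paper.
    """
    # Clamp to the reference distance so the log term is never negative.
    d = max(distance_m, ref_distance_m)
    distance_loss = 10.0 * path_loss_exponent * math.log10(d / ref_distance_m)
    return ref_power_dbm - distance_loss - wall_loss_db * walls_crossed
```

In a map-based setting, `walls_crossed` could be obtained by counting the wall segments that the straight line between AP and receiver intersects. Here it is simply passed in as a number.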

2024

- Neural Surfel Reconstruction: Addressing Loop Closure Challenges in Large-Scale 3D Neural Scene Mapping. Jiadi Cui+, Jiajie Zhang+, Laurent Kneip, and 1 more author. Sensors (Basel, Switzerland), 2024.

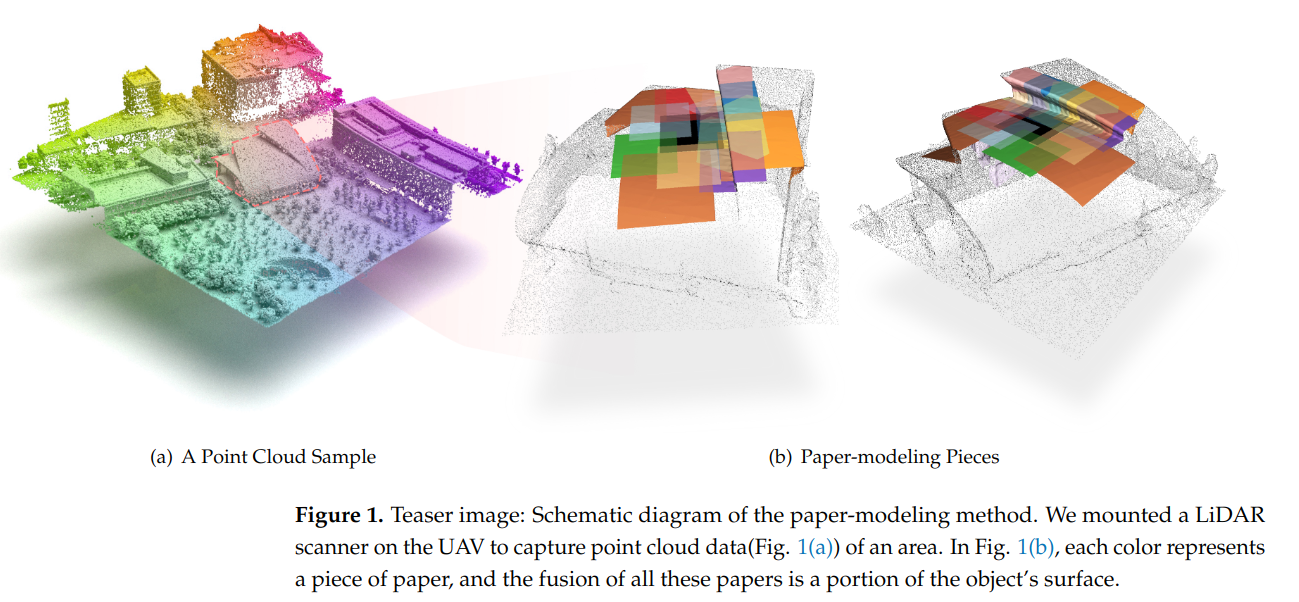

Efficiently reconstructing complex and intricate surfaces at scale remains a significant challenge in 3D surface reconstruction. Recently, implicit neural representations have become a popular topic in 3D surface reconstruction. However, handling loop closure and bundle adjustment is difficult for neural methods, because they learn the neural parameters globally. We present an algorithm that leverages the concept of surfels and expands relevant definitions to address such challenges. By integrating neural descriptors with surfels and framing surfel association as a deformation graph optimization problem, our method is able to effectively perform loop closure detection and loop correction in challenging scenarios. Furthermore, the surfel-level representation reduces the complexity of 3D neural reconstruction. Meanwhile, the binding of neural descriptors to corresponding surfels produces a dense volumetric signed distance function (SDF), enabling mesh reconstruction. Our approach demonstrates a significant improvement in reconstruction accuracy, reducing the average error by 16.9% compared to previous methods, while also generating modeling files that are up to 90% smaller than those produced by traditional methods.